I'm a Ph.D. candidate at the State Key Lab of CAD&CG, Zhejiang University, advised by Prof. Rui Wang and Prof. Yuchi Huo. During my Ph.D., I have been fortunate to collaborate with Prof. Evan Peng at the University of Hong Kong's Computational Imaging & Mixed Representation Laboratory (WeLight) and Dr. Yazhen Yuan at Tencent.

Research Interests

My research lies at the intersection of computer graphics and deep learning. I aim to achieve high-performance, high-quality intelligent rendering through efficient representations and generative techniques.

My doctoral work focuses on high-quality frame generation techniques, using data-driven methods to accelerate real-time rendering of 3D scenes with complex motion and diverse lighting conditions in interactive applications.

To date, I have worked on spatiotemporal supersampling for real-time rendering, neural light transport, and generative models for image synthesis.

I expect to complete my Ph.D. in June 2026 and am actively seeking postdoctoral positions starting in late 2026 or 2027. If you are interested in potential collaborations or opportunities, please feel free to contact me.

Publications ( * equal contribution, # corresponding author)

Selected

Consecutive Frame Extrapolation with Predictive Sparse Shading

Zhizhen Wu, Zhe Cao, Yazhen Yuan, Zhilong Yuan, Rui Wang, Yuchi Huo#

ACM Transactions on Graphics (SIGGRAPH Asia 2025)

A predictive sparse shading framework that jointly learns extrapolation error, flows, and frame synthesis to selectively shade dynamic regions while reusing generated pixels elsewhere.

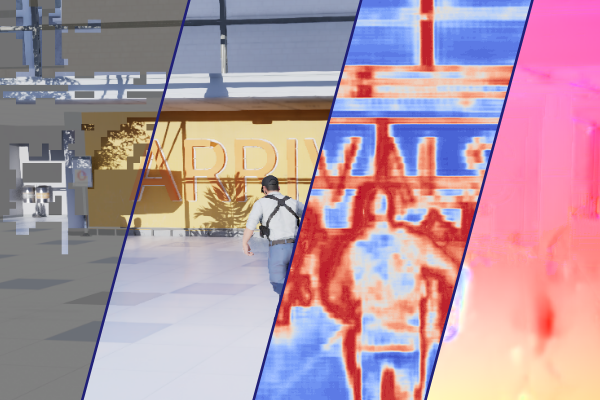

MoFlow: Motion-Guided Flows for Recurrent Rendered Frame Prediction

Zhizhen Wu, Zhilong Yuan, Chenyu Zuo, Yazhen Yuan, Yifan Peng, Guiyang Pu, Rui Wang, Yuchi Huo#

ACM Transactions on Graphics (Vol.44, No.22, 2025)

A temporally coherent motion representation and estimator that constructs motion-guided flows from recurrent latent features, enabling stable multi-frame extrapolation.

Adaptive Recurrent Frame Prediction with Learnable Motion Vectors

Zhizhen Wu, Chenyu Zuo, Yuchi Huo#, Yazhen Yuan, Yifan Peng, Guiyang Pu, Rui Wang, Hujun Bao

SIGGRAPH Asia 2023 (Conference Paper)

A rendered frame extrapolation framework that learns residual flow over rendered motion vectors for low-latency recurrent prediction, handling occlusions, dynamic shading, and translucent objects.

All Publications

StereoFG: Generating Stereo Frames from Centered Feature Stream

Chenyu Zuo, Yazhen Yuan, Zhizhen Wu, Zhijian Liu, Jingzhen Lan, Ming Fu, Yuchi Huo, Rui Wang

SIGGRAPH Asia 2025 (Conference Paper)

A stereo frame generation pipeline for VR that aligns binocular features into a centered stream to upsample alternating low-resolution views into temporally stable, high-quality stereo frames.

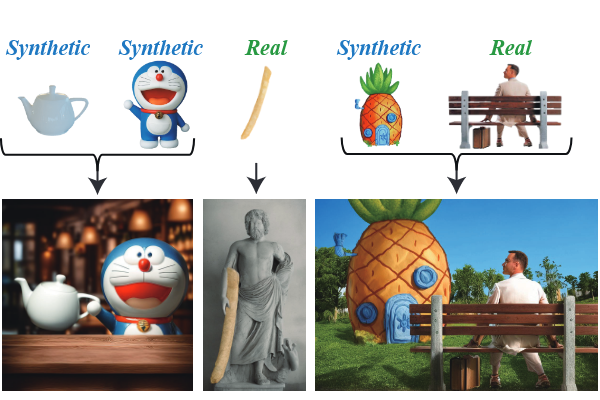

IntrinsicControlNet: Cross-distribution Image Generation with Real and Unreal

Jiayuan Lu, Rengan Xie, Zixuan Xie, Zhizhen Wu, Dianbing Xi, Qi Ye, Rui Wang, Hujun Bao, Yuchi Huo

ICCV 2025

A diffusion framework that takes intrinsic images (geometry, materials, and lighting) as controls to disentangle synthetic control signals from real-image content for controllable photorealistic image generation.

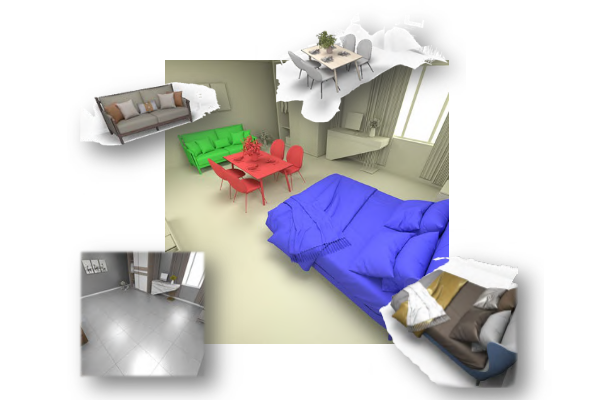

NeLT: Object-oriented Neural Light Transfer

Chuankun Zheng*, Yuchi Huo*, Shaohua Mo, Zhihua Zhong, Zhizhen Wu, Wei Hua, Rui Wang, Hujun Bao#

ACM Transactions on Graphics (Journal Paper)

An object-oriented neural light transfer representation that decomposes scene global illumination into per-object neural transport functions, enabling interactive global illumination for dynamic lighting, materials, and geometry without path-traced inputs.
